
InfiniBand networks are commonplace in the High Performance Computing (HPC) sector, but less so in cloud. As the two sectors converge, InfiniBand support becomes important for OpenStack. This article covers our recent work integrating InfiniBand networks with OpenStack and bare metal compute.

InfiniBand

InfiniBand (IB) is a standardised network interconnect technology frequently used in HPC due to its high throughput, low latency, and intrinsic support for Remote Direct Memory Access (RDMA) based communication.

After initial competition in the IB market, only one vendor remains - Mellanox. Despite this, IB remains a popular choice for HPC and storage clusters.

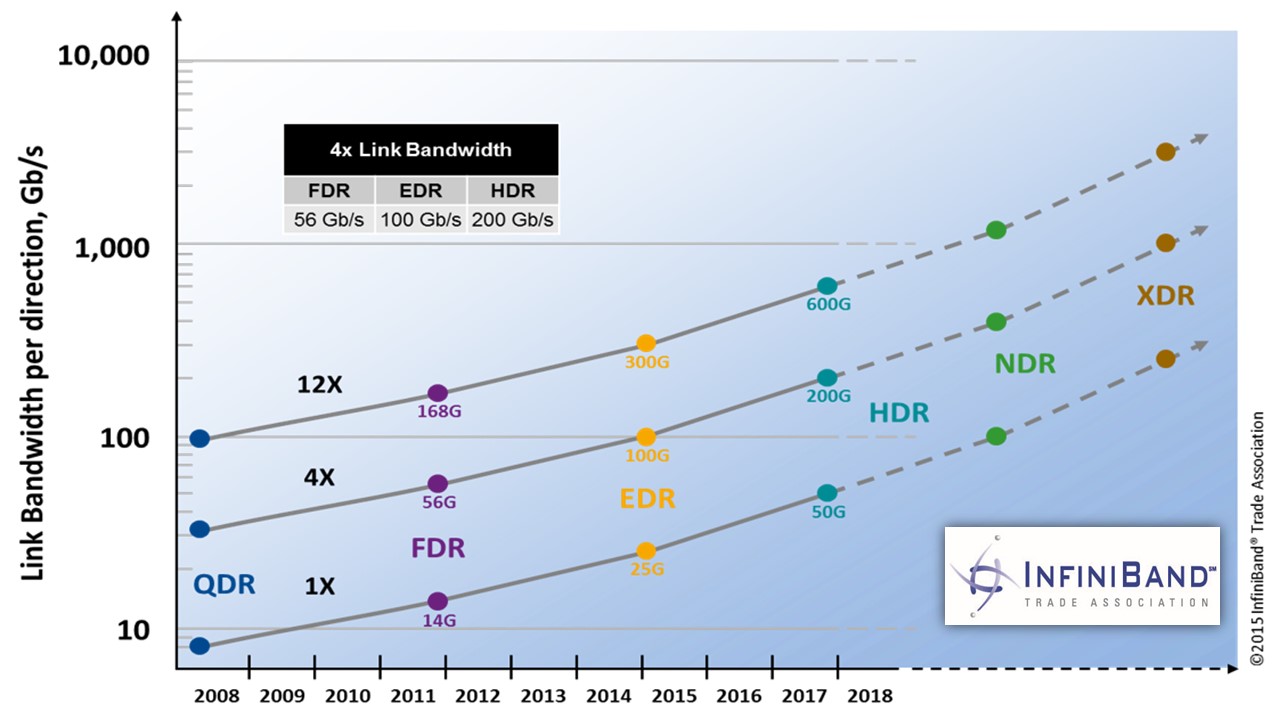

This slightly old roadmap from the InfiniBand Trade Association shows the data rates of historical and future InfiniBand standards.

Unmanaged IB

Integration of Mellanox IB and OpenStack is documented for virtualised compute, but support for bare metal compute with Ironic is listed as TBD.

The simplest solution to that TBD is to treat the IB network as an unmanaged resource, outside of OpenStack's control, and give instances unrestricted access to the network. There are a couple of drawbacks to this approach. First, there is no isolation between different users of the system, since all users share a single partition. Second, IP addresses on the IB network must be managed outside of Neutron and configured manually.

Mellanox IB and Virtualised Compute

Before we look at IB for bare metal compute, let's look at the (relatively) well-trodden path - virtualised compute. Mellanox provides a Neutron plugin with two ML2 mechanism drivers that allow Mellanox ConnectX-3, ConnectX-4 and ConnectX-5 NICs to be plugged into VMs via SR-IOV. As far as Neutron is concerned, IB partitions are treated as VLANs.

The first driver, mlnx_infiniband, binds to IB ports. Its companion agent, neutron-mlnx-agent, runs on the compute nodes and manages their NICs and SR-IOV Virtual Functions (VFs), ensuring that they are members of the correct IB partitions.

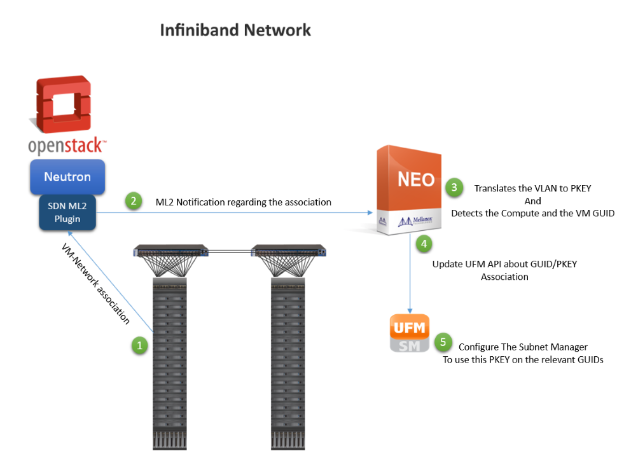

The second driver, mlnx_sdn_assist, programs the InfiniBand subnet manager to ensure that compute nodes are members of the required partitions. This may sound simple, but it actually involves a chain of services: Mellanox NEO is an SDN controller, Mellanox UFM is an InfiniBand fabric manager, and OpenSM is an open source InfiniBand subnet manager. This driver is optional, since neutron-mlnx-agent already manages partition membership on the compute nodes, but it does add an extra layer of security.
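Because Neutron sees each IB partition as a VLAN, it may help to see how a VLAN segmentation ID relates to an InfiniBand partition key (P_Key). A P_Key is 16 bits: the low 15 bits identify the partition and the top bit marks full (1) or limited (0) membership. The sketch below assumes, purely for illustration, that the segmentation ID is used directly as the partition number. The Mellanox drivers and NEO may map them differently, so check your own deployment.

```python
"""Sketch: relating a Neutron VLAN segmentation ID to an IB partition key.

Assumption (hypothetical mapping): the segmentation ID is used directly as
the 15-bit partition number.
"""

FULL_MEMBERSHIP_BIT = 0x8000  # top bit of a P_Key: 1 = full, 0 = limited member
PARTITION_MASK = 0x7FFF       # low 15 bits: the partition number


def segmentation_id_to_pkey(segmentation_id: int, full_member: bool = True) -> int:
    """Return a 16-bit P_Key for a VLAN-style segmentation ID (hypothetical mapping)."""
    if not 1 <= segmentation_id <= 4094:
        raise ValueError(f"not a valid VLAN ID: {segmentation_id}")
    pkey = segmentation_id & PARTITION_MASK
    if full_member:
        pkey |= FULL_MEMBERSHIP_BIT
    return pkey


def describe_pkey(pkey: int) -> str:
    """Render a P_Key as four hex digits plus its decoded partition and membership."""
    membership = "full" if pkey & FULL_MEMBERSHIP_BIT else "limited"
    return f"0x{pkey:04x} (partition {pkey & PARTITION_MASK}, {membership} member)"


if __name__ == "__main__":
    for vlan in (100, 2001):
        print(describe_pkey(segmentation_id_to_pkey(vlan)))
```

The membership bit matters for isolation: limited members of a partition can talk to full members but not to each other.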

Connectivity to IB partitions from the Open vSwitch bridges on the Neutron network host is made possible via Mellanox's eIPoIB kernel module, provided with OFED, which allows Ethernet to be tunnelled over an InfiniBand network. This allows Neutron to provide DHCP and L3 routing services.

eIPoIB ContraBand?

As of the 4.0 release, Mellanox OFED no longer provides the eIPoIB kernel module that is required for Neutron DHCP and L3 routing. No official solution to this problem is provided, nor is it mentioned in the documentation on the wiki. The OFED software is fairly tightly coupled to a particular kernel, so using an older release of OFED is typically not an option when using a recent OS.

Mellanox IB and Bare Metal Compute

How do we translate this to the world of bare metal compute?

The Ironic InfiniBand specification provides us with a few pointers. The specification includes two principal changes:

- inspection of InfiniBand devices using Ironic Inspector

- PXE booting compute nodes using InfiniBand devices

Inspection is necessary, but we don't need to PXE boot over IB, since both of our clients' systems have Ethernet networks as well.

Compared with the solution for virtualised compute, we don't need SR-IOV, since compute instances have full control over their hardware. We also don't need the mlnx_infiniband ML2 driver and associated neutron-mlnx-agent, since we can't run an agent on the compute instance. The mlnx_sdn_assist driver is required, as the only way to enforce partition membership for a bare metal compute node is via the subnet manager.
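The ML2 side of the bare metal configuration is therefore fairly small. A minimal sketch, using Python's configparser to render the relevant part of ml2_conf.ini with only standard ML2 options: mlnx_sdn_assist takes the place of mlnx_infiniband and its agent. The physical network name `ib`, the VLAN range, and `openvswitch` as the Ethernet mechanism driver are hypothetical, and driver-specific settings such as the NEO connection details are left out.

```python
"""Sketch: render the ML2 options for bare metal nodes with IB ports.

Hypothetical values: physnet name "ib", the VLAN range, and the Ethernet
mechanism driver. Only standard ML2 options are shown.
"""
import configparser
import io


def render_ml2_conf(ib_physnet: str = "ib",
                    vlan_range: tuple[int, int] = (100, 199),
                    ethernet_driver: str = "openvswitch") -> str:
    config = configparser.ConfigParser()
    config["ml2"] = {
        "type_drivers": "flat,vlan",
        "tenant_network_types": "vlan",
        # No mlnx_infiniband: there is no neutron-mlnx-agent on bare metal.
        # mlnx_sdn_assist binds the IB ports and drives partition membership.
        "mechanism_drivers": f"{ethernet_driver},mlnx_sdn_assist",
    }
    low, high = vlan_range
    # IB partitions appear to Neutron as VLANs on the IB physical network.
    config["ml2_type_vlan"] = {"network_vlan_ranges": f"{ib_physnet}:{low}:{high}"}
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()


if __name__ == "__main__":
    print(render_ml2_conf())
```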

Ironic port physical network tags are applied during hardware inspection, and can be used to ensure a correct mapping between Neutron ports and Ironic ports in the presence of multiple networks.
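The role of the physical network tag can be illustrated with a simplified model: when a Neutron port on a given physical network is attached to a node, only Ironic ports carrying the same tag are candidates. This is a sketch of the idea rather than Ironic's actual implementation (which, for example, also handles untagged ports); the `IronicPort` record, `attach_vif` helper and the physnet names `ib` and `ethernet` are hypothetical.

```python
"""Simplified model of matching Neutron ports to Ironic ports by physical network.

Not Ironic's real code: the dataclass, helper and physnet names are hypothetical.
"""
from dataclasses import dataclass
from typing import Optional


@dataclass
class IronicPort:
    address: str                     # Ethernet-style address registered in Ironic
    physical_network: Optional[str]  # tag applied during hardware inspection
    vif: Optional[str] = None        # Neutron port ID once attached


def attach_vif(ports: list[IronicPort], vif_id: str, physnet: str) -> IronicPort:
    """Attach a Neutron port on `physnet` to the first free Ironic port with that tag."""
    for port in ports:
        if port.vif is None and port.physical_network == physnet:
            port.vif = vif_id
            return port
    raise LookupError(f"no free Ironic port on physical network {physnet!r}")


if __name__ == "__main__":
    node_ports = [
        IronicPort("52:54:00:12:34:56", "ethernet"),
        IronicPort("7c:fe:90:29:26:52", "ib"),
    ]
    chosen = attach_vif(node_ports, "vif-ib-0", "ib")
    print(chosen.address)  # prints 7c:fe:90:29:26:52, the IB port
```

Without the tags, the Neutron port for the IB network could just as easily be bound to the node's Ethernet port.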

Addressing

A quick diversion into addressing of InfiniBand in OpenStack. An InfiniBand GUID is a 64-bit identifier, composed of a 24-bit manufacturer prefix followed by a 40-bit device identifier. In Ironic, ports are registered with an Ethernet-style 48-bit address, formed by concatenating the first and last 24 bits of the GUID and discarding the middle 16 bits.
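A minimal sketch of that GUID-to-address conversion. It accepts a GUID either as colon-separated octets or as a 0x-prefixed hex string (the form that tools such as ibstat print); the example GUID is made up.

```python
"""Sketch: derive the Ethernet-style address Ironic registers for an IB port.

Keeps the first 3 bytes (24-bit manufacturer prefix) and the last 3 bytes of
the 64-bit GUID, dropping the middle 16 bits. Example GUIDs are made up.
"""


def guid_octets(guid: str) -> list[str]:
    """Split a GUID given as 'aa:bb:...' or as a 0x-prefixed hex string into 8 octets."""
    text = guid.strip().lower()
    if text.startswith("0x"):
        digits = text[2:]
        octets = [digits[i:i + 2] for i in range(0, len(digits), 2)]
    else:
        octets = text.split(":")
    if len(octets) != 8 or any(len(o) != 2 for o in octets):
        raise ValueError(f"not a 64-bit GUID: {guid!r}")
    return octets


def guid_to_ethernet_address(guid: str) -> str:
    """Concatenate the first and last 3 octets of the GUID."""
    octets = guid_octets(guid)
    return ":".join(octets[:3] + octets[-3:])


if __name__ == "__main__":
    print(guid_to_ethernet_address("7c:fe:90:03:00:29:26:52"))  # prints 7c:fe:90:29:26:52
    print(guid_to_ethernet_address("0x7cfe900300292652"))       # prints 7c:fe:90:29:26:52
```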

The Mellanox hardware manager in Ironic Python Agent (IPA) registers a client ID for InfiniBand ports, which is used for DHCP and also within Ironic to classify a port as InfiniBand. The client ID is formed by concatenating a vendor-specific prefix, such as the one for Mellanox ConnectX family NICs, with the port's GUID.
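A minimal sketch of forming the client ID. The 12-byte prefix below is, to our understanding, the value IPA uses for Mellanox ConnectX NICs (it contains 00:02:c9, the Mellanox OUI), but treat it as an assumption and check it against the Mellanox hardware manager in your IPA release. Together with the 8-byte GUID, the result is 20 bytes long.

```python
"""Sketch: form the DHCP client ID for an InfiniBand port.

Assumption: MLNX_CLIENT_ID_PREFIX is the 12-byte vendor prefix we believe IPA
uses for Mellanox ConnectX NICs; verify it against your IPA release.
"""

MLNX_CLIENT_ID_PREFIX = "ff:00:00:00:00:00:02:00:00:02:c9:00"


def make_client_id(guid: str, prefix: str = MLNX_CLIENT_ID_PREFIX) -> str:
    """Concatenate a vendor-specific prefix with the colon-separated 8-byte port GUID."""
    octets = guid.lower().split(":")
    if len(octets) != 8:
        raise ValueError(f"expected 8 octets in GUID, got {len(octets)}: {guid}")
    return f"{prefix}:{':'.join(octets)}"


if __name__ == "__main__":
    client_id = make_client_id("7c:fe:90:03:00:29:26:52")  # made-up GUID
    print(client_id)
    print(len(client_id.split(":")), "bytes")  # 12-byte prefix + 8-byte GUID
```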

When using IP over InfiniBand (IPoIB), the IPoIB device has a 20-byte hardware address, formed by concatenating 4 bytes of flags and queue pair number, an 8-byte subnet prefix, and the 8-byte port GUID.
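The 20-byte layout can be made concrete by splitting an address into its three fields. A minimal sketch; the example address is made up, using the default subnet prefix fe:80:00:00:00:00:00:00.

```python
"""Sketch: split a 20-byte IPoIB hardware address into its parts.

Layout: 4 leading bytes (a flags byte plus a 3-byte queue pair number),
an 8-byte subnet prefix and the 8-byte port GUID. Example address is made up.
"""
from typing import NamedTuple


class IPoIBAddress(NamedTuple):
    flags_qpn: str      # bytes 0-3
    subnet_prefix: str  # bytes 4-11
    guid: str           # bytes 12-19


def parse_ipoib_address(hwaddr: str) -> IPoIBAddress:
    octets = hwaddr.lower().split(":")
    if len(octets) != 20:
        raise ValueError(f"expected 20 octets, got {len(octets)}: {hwaddr}")
    return IPoIBAddress(
        flags_qpn=":".join(octets[:4]),
        subnet_prefix=":".join(octets[4:12]),
        guid=":".join(octets[12:]),
    )


if __name__ == "__main__":
    addr = parse_ipoib_address(
        "80:00:02:08:fe:80:00:00:00:00:00:00:7c:fe:90:03:00:29:26:52"
    )
    print(addr.guid)  # prints 7c:fe:90:03:00:29:26:52
```

On Linux, the hardware address of an IPoIB interface can be read from /sys/class/net/<interface>/address.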

ALaSKA

Our first bare metal IB project, the SKA's ALaSKA system, is a shared computing resource, and does not have strict project isolation requirements. We therefore decided to treat the IB network as a 'flat' network, without partitions. This avoids the requirement for Mellanox NEO and UFM, and the associated software license. Instead, we run an OpenSM subnet manager on the OpenStack controller.

In order for Neutron to bind to the IB ports, we still use the mlnx_sdn_assist mechanism driver. We developed a patch that allows the driver to be configured not to forward configuration to NEO, and we're working on pushing this patch upstream.

The ALaSKA controller runs CentOS 7.4, which means that we need to use Mellanox OFED 4.1+. As mentioned previously, this prevents us from using eIPoIB, the prescribed method for providing Neutron DHCP and L3 routing services on InfiniBand.

Config Drive to the Rescue

Thankfully, Stig (our CTO) came to the rescue with his suggestion of using a config drive to configure IP addresses on the IB network. If the Neutron subnet has enable_dhcp set to False, then Nova populates a file, network_data.json, in the config drive with static IP configuration for the instance.
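To illustrate what the instance has to work with, here is a sketch that pulls static IPv4 settings out of network_data.json, keyed by each link's Ethernet-style address. The field names (`links`, `networks`, `ethernet_mac_address`, `ip_address`, `netmask`) follow the OpenStack network_data.json format as we understand it; the sample document is hypothetical and trimmed to the fields used.

```python
"""Sketch: extract static IPv4 settings from a config drive's network_data.json.

The sample document is hypothetical and trimmed to the fields used here.
"""
import json


def static_ipv4_config(network_data: dict) -> dict[str, tuple[str, str]]:
    """Map each link's Ethernet-style address to (ip_address, netmask)."""
    macs = {link["id"]: link["ethernet_mac_address"].lower()
            for link in network_data.get("links", [])}
    config = {}
    for net in network_data.get("networks", []):
        # Only static IPv4 entries carry an address; other types
        # (e.g. ipv4_dhcp, ipv6) are skipped.
        if net.get("type") != "ipv4":
            continue
        mac = macs.get(net["link"])
        if mac is not None:
            config[mac] = (net["ip_address"], net["netmask"])
    return config


SAMPLE = json.loads("""
{
  "links": [{"id": "ib0-link", "type": "phy",
             "ethernet_mac_address": "7c:fe:90:29:26:52"}],
  "networks": [{"id": "network0", "type": "ipv4", "link": "ib0-link",
                "ip_address": "10.10.0.21", "netmask": "255.255.255.0"}]
}
""")

if __name__ == "__main__":
    print(static_ipv4_config(SAMPLE))
```

On the instance, the file sits on the config drive at openstack/latest/network_data.json. Note that the link is identified by a 6-byte Ethernet-style address, not by the interface's IPoIB hardware address.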

This works because we don't need to PXE boot via the IB network. We still don't get L3 routing, but that isn't a requirement for our current use cases.

Cloud-init

Config drives are typically processed at boot by a service such as cloud-init, prior to configuring network interfaces. One thing we need to ensure is that the required kernel modules (ib_ipoib and mlx4_ib or mlx5_ib) are loaded prior to processing the config drive. For this we use systemd's /etc/modules-load.d/ mechanism, and have built a Diskimage Builder (DIB) element that injects configuration for this into user images.
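What such an element does can be sketched in a few lines: it drops a file into the image's /etc/modules-load.d/ directory, which systemd-modules-load reads at boot (one module name per line, with # starting a comment). The file name `infiniband.conf` and the `write_modules_load_conf` helper are hypothetical rather than taken from our element. Listing both mlx4_ib and mlx5_ib is a convenience; keep the one that matches your NIC generation.

```python
"""Sketch: write a modules-load.d file into an image tree, as a DIB element might.

Hypothetical: the helper and the file name. Standard systemd behaviour: files in
/etc/modules-load.d/*.conf list one kernel module per line; '#' starts a comment.
"""
import tempfile
from pathlib import Path

# ConnectX-3 uses the mlx4 driver family; ConnectX-4 and later use mlx5.
IB_MODULES = ("ib_ipoib", "mlx4_ib", "mlx5_ib")


def write_modules_load_conf(image_root: Path, modules=IB_MODULES,
                            name: str = "infiniband.conf") -> Path:
    """Create <image_root>/etc/modules-load.d/<name> listing `modules`."""
    conf_dir = image_root / "etc" / "modules-load.d"
    conf_dir.mkdir(parents=True, exist_ok=True)
    path = conf_dir / name
    lines = ["# Kernel modules required for IPoIB on Mellanox NICs", *modules]
    path.write_text("\n".join(lines) + "\n")
    return path


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        print(write_modules_load_conf(Path(root)).read_text())
```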

Problem sorted? Sadly not. Due to the discrepancy between the 20-byte IPoIB hardware address and the 6-byte Ethernet-style address in the config drive, cloud-init is not able to determine which interface to configure. We developed a patch for cloud-init that resolves this dual identity issue.
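The idea behind resolving the dual identity can be sketched as follows: when looking up the interface for an address from the config drive, also derive an Ethernet-style address from each 20-byte IPoIB hardware address (the first 3 and last 3 bytes of its trailing 8-byte GUID) and compare against that. This illustrates the approach only; it is not the code of our cloud-init patch, and the interface names and addresses are hypothetical.

```python
"""Sketch: match an Ethernet-style address from the config drive to a local
interface, including IPoIB interfaces with 20-byte hardware addresses.

Illustrative only, not the actual cloud-init patch.
"""
from typing import Optional


def ethernet_style_address(hwaddr: str) -> str:
    """Return a 6-byte address for either an Ethernet or an IPoIB interface."""
    octets = hwaddr.lower().split(":")
    if len(octets) == 6:
        return ":".join(octets)
    if len(octets) == 20:
        guid = octets[12:]  # the last 8 bytes of an IPoIB address are the port GUID
        return ":".join(guid[:3] + guid[-3:])
    raise ValueError(f"unsupported hardware address: {hwaddr}")


def find_interface(mac: str, interfaces: dict[str, str]) -> Optional[str]:
    """Return the name of the interface whose (derived) address matches `mac`."""
    wanted = mac.lower()
    for name, hwaddr in interfaces.items():
        if ethernet_style_address(hwaddr) == wanted:
            return name
    return None


if __name__ == "__main__":
    local = {
        "eth0": "52:54:00:12:34:56",
        "ib0": "80:00:02:08:fe:80:00:00:00:00:00:00:7c:fe:90:03:00:29:26:52",
    }
    print(find_interface("7c:fe:90:29:26:52", local))  # prints ib0
```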

Zooming Out

Putting all of this together, from a user's perspective, we have:

- a new Neutron network that user instances can attach to, in addition to the two existing Ethernet networks

- updated user images that include the kernel module loading config and patched cloud-init package

- a requirement for users to set config-drive=True when creating instances
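For users scripting against the Compute API rather than using the CLI, the config drive requirement comes down to one field in the Nova server-create request body. A sketch of building that body; the image, flavor and network IDs are placeholders, and the body is trimmed to the fields relevant here.

```python
"""Sketch: a Nova server-create request body for an instance on the IB network.

All IDs are placeholders; only fields relevant to this setup are included.
"""
import json


def server_create_body(name: str, image_id: str, flavor_id: str,
                       network_ids: list[str]) -> dict:
    return {
        "server": {
            "name": name,
            "imageRef": image_id,   # updated user image (module config + patched cloud-init)
            "flavorRef": flavor_id,
            "networks": [{"uuid": net_id} for net_id in network_ids],
            "config_drive": True,   # IB addressing is delivered via network_data.json
        }
    }


if __name__ == "__main__":
    body = server_create_body("ib-test", "IMAGE_ID", "FLAVOR_ID",
                              ["ETHERNET_NET_ID", "IB_NET_ID"])
    print(json.dumps(body, indent=2))
```

From the command line, the equivalent is passing `--config-drive True` to `openstack server create`.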

This will lead to instances with an IP address on their IPoIB interface.

Verne Global - Adding Multitenancy

Verne Global operate hpcDIRECT, a groundbreaking HPC-as-a-Service cloud based on OpenStack. hpcDIRECT inherently has strict tenant isolation requirements. We therefore added two Mellanox services to the setup - NEO and UFM - to provide isolation between projects via InfiniBand partitions.

NEO & UFM

The hpcDIRECT control plane is deployed in containers via Kolla Ansible, and the team at Verne Global were keen to ensure the benefits of containerisation extend to NEO and UFM. To this end, we created a set of image recipes and Ansible deployment roles:

- NEO image and deployment role

- UFM image and deployment role

These tools have been integrated into the hpcDIRECT Kayobe configuration as an extension to the standard deployment workflow.

Neither service would be classed as a typical container workload - the containers run systemd and several services each. We also require host networking for access to the InfiniBand network. Still, containers allow us to decouple these services from the host OS and from other services running on the same host.

The setup did not work initially, but after receiving a patched version of NEO from the team at Mellanox, we were on our way.

NEO and UFM allow some ports to be configured, but both seem to require the use of ports 80 and 443. These are popular ports, and it's easy to hit a conflict with another service such as Horizon.
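Because NEO and UFM run with host networking, a clash on ports 80 or 443 only shows up at deploy time unless you check first. A minimal pre-flight sketch using only the Python standard library: it attempts a TCP connection to each port, so it detects services listening on the given address (here assumed to be the loopback address) but not, for example, a service bound only to a different interface.

```python
"""Sketch: pre-flight check for ports that NEO and UFM need on a host-networked node."""
import socket

REQUIRED_PORTS = (80, 443)


def ports_in_use(host: str, ports=REQUIRED_PORTS, timeout: float = 1.0) -> list[int]:
    """Return the subset of `ports` on which something accepts TCP connections."""
    busy = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success instead of raising on refusal.
            if sock.connect_ex((host, port)) == 0:
                busy.append(port)
    return busy


if __name__ == "__main__":
    conflicts = ports_in_use("127.0.0.1")
    if conflicts:
        print(f"ports already in use: {conflicts}")
    else:
        print("ports 80 and 443 are free")
```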

Fabric Visibility

In an isolated environment, compute nodes should only have visibility into the partitions of which they are a member. This principle should also apply to low-level InfiniBand fabric tools such as ibnetdiscover.

Isolation at this layer requires the Secure Host feature of Mellanox ConnectX NICs.

A Fellow Traveller

The solution presented here is based on work done recently by the Mellanox team working with Jacob Anders from CSIRO in Australia.

You can see the presentation Jacob made with Moshe and Erez from Mellanox at the OpenStack Summit in Vancouver.

Summary

After integrating a Mellanox InfiniBand network with OpenStack and bare metal compute for two clients, it's clear there are significant obstacles - not least the removal of eIPoIB from OFED without a replacement solution. Despite this, we were able to satisfy the requirements at each site. As Ironic gains popularity, particularly for Scientific Computing, we expect to see more deployments of this kind.

Thanks to Moshe Levi from Mellanox for helping us to find some of the missing pieces of the puzzle.