This post is the second in a series on HPC networking in OpenStack. In the series we'll discuss StackHPC's current and future work on integrating OpenStack with high performance network technologies. This post discusses how the Kayobe project uses Ansible to define physical and virtual network infrastructure as code.

If you've not read it yet, why not begin with the first post in this series.

The Network as Code

Operating a network has long been a difficult, high-risk task. Networks can be fragile, and the consequences of incorrect configuration can be far-reaching. Automation using scripts allows us to improve on this, reaping some of the benefits of established software development practices such as version control.

The DevOps and configuration management tools that have emerged in recent years are well suited to the task of network management, with Ansible in particular offering a large selection of modules for configuring network devices. Of course, the network doesn't end at the switch, and Ansible is equally well suited to driving network configuration on the attached hosts - from simple interface configuration to complex virtual networking topologies.

OpenStack Networks

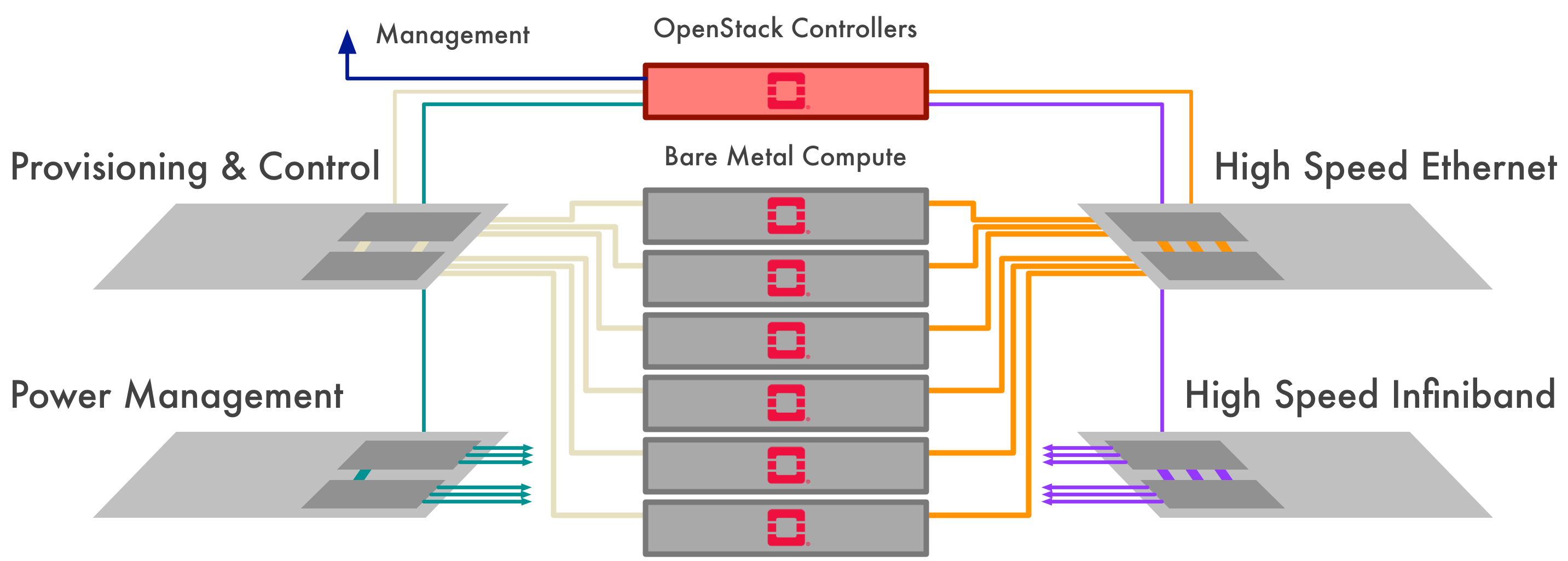

OpenStack can be deployed in a number of configurations, with various levels of networking complexity. Some of the classes of networks that may be used in an OpenStack cluster include:

- Power & out-of-band management network
  - Access to power management devices and out-of-band management systems (e.g. BMCs) of control and compute plane hosts.
- Overcloud provisioning network
  - Used by the seed host to provision the control plane and virtualised compute plane hosts.
- Workload inspection network
  - Used by the control plane hosts to inspect the hardware of the bare metal compute hosts.
- Workload provisioning network
  - Used by the control plane hosts to provision the bare metal compute hosts.
- Workload cleaning network
  - Used by the control plane hosts to clean the bare metal compute hosts after use.
- Internal network
  - Used by the control plane for internal communication and access to the internal and admin OpenStack API endpoints.
- External network
  - Hosts the public OpenStack API endpoints and provides external network access for the hosts in the system.
- Tenant networks
  - Used by tenants for communication between compute instances. Multiple networks can provide isolation between tenants. These may be overlay networks such as GRE or VXLAN tunnels but are more commonly VLANs in bare metal compute environments.
- Storage network
  - Used by control and compute plane hosts for access to storage systems.
- Storage management network
  - Used by storage systems for internal communication.

Hey wait, where are you going? Don't worry, not all clusters require all of these classes of networks, and in general it's possible to map more than one of these to a single virtual or physical network.

Kayobe & Kolla-ansible

Kayobe heavily leverages the Kolla-ansible project to deploy a containerised OpenStack control plane. In general, Kolla-ansible performs very little direct configuration of the hosts that it manages - most is limited to Docker containers and volumes. This leads to a very reliable and portable tool, but does leave wide open the question of how to configure the underlying hosts to the point where they can run Kolla-ansible's containers. This is where Kayobe comes in.

Host networking

Kolla-ansible takes as input the names of network interfaces that map to the various classes of network that it differentiates. The following variables should be set in globals.yml.

    # Internal network
    api_interface: br-eth1

    # External network
    kolla_external_vip_interface: br-eth1

    # Storage network
    storage_interface: eth2

    # Storage management network
    cluster_interface: eth2

In this example we have two physical interfaces, eth1 and eth2. A software bridge br-eth1 exists, into which eth1 is plugged. Kolla-ansible expects these interfaces to be up and configured with an IP address.

Rather than reinventing the wheel, Kayobe makes use of existing Ansible roles available on Ansible Galaxy. Galaxy can be a bit of a wild west, with many overlapping and unmaintained roles of dubious quality. That said, with a little perseverance it's possible to find good quality roles such as MichaelRigart.interfaces. Kayobe uses this role to configure network interfaces, bridges, and IP routes on the control plane hosts.

Here's an example of a simple Ansible playbook that uses the MichaelRigart.interfaces role to configure eth2 for DHCP, and a bridge breth1 with a static IP address, static IP route and a single port, eth1.

    - name: Ensure network interfaces are configured
      hosts: localhost
      become: yes
      roles:
        - role: MichaelRigart.interfaces
          # List of Ethernet interfaces to configure.
          interfaces_ether_interfaces:
            - device: eth2
              bootproto: dhcp
          # List of bridge interfaces to configure.
          interfaces_bridge_interfaces:
            - device: breth1
              bootproto: static
              address: 192.168.1.150
              netmask: 255.255.255.0
              gateway: 192.168.1.1
              mtu: 1500
              route:
                - network: 192.168.200.0
                  netmask: 255.255.255.0
                  gateway: 192.168.1.1
              ports:
                - eth1

If you are following along at home, ensure that Ansible maintains a stable control connection to the hosts being configured, and that this connection is not reconfigured by the MichaelRigart.interfaces role.

Kayobe uses Open vSwitch as the Neutron ML2 mechanism for providing network services such as DHCP and routing on the control plane hosts. Kolla-ansible deploys a containerised Open vSwitch daemon, and creates OVS bridges for Neutron which are attached to existing network interfaces. Kayobe creates virtual Ethernet pairs using its veth role, and configures Kolla-ansible to connect the OVS bridges to these.

A quick namecheck of other Galaxy roles used by Kayobe for host network configuration: ahuffman.resolv configures the DNS resolver, and resmo.ntp configures the NTP daemon. Thanks go to the maintainers of these roles.

The bigger picture

The previous examples show how one might configure a set of network interfaces on a single host, but how can we extend that configuration to cover multiple hosts in a cluster in a declarative manner, without unnecessary repetition? Ansible's combination of YAML and Jinja2 templating turns out to be great at this.

A network in Kayobe is assigned a name, which is used as a prefix for all variables that describe the network's attributes. Here's the global configuration for a hypothetical example network that would typically be added to Kayobe's networks.yml configuration file.

    # Definition of 'example' network.
    example_cidr: 10.0.0.0/24
    example_gateway: 10.0.0.1
    example_allocation_pool_start: 10.0.0.3
    example_allocation_pool_end: 10.0.0.127
    example_vlan: 42
    example_mtu: 1500
    example_routes:
      - cidr: 10.1.0.0/24
        gateway: 10.0.0.2

Defining each option as a top level variable allows them to be overridden individually, if necessary.

We define the network's IP subnet, VLAN, IP routes, MTU, and a pool of IP addresses for Kayobe to assign to the control plane hosts. Static IP addresses are allocated automatically using Kayobe's ip-allocation role, but may be manually defined by pre-populating network-allocation.yml.
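To make the allocation step concrete, here is a minimal Python sketch of how addresses might be handed out from such a pool. It is not Kayobe's ip-allocation role: the function name `allocate_ips` and its `existing` parameter are hypothetical, and the real role may choose addresses differently. The sketch only illustrates the idea of keeping earlier (or manually pre-populated) allocations and giving each remaining host the lowest free address.

```python
import ipaddress


def allocate_ips(hosts, pool_start, pool_end, existing=None):
    """Assign each host the lowest free address in [pool_start, pool_end].

    `existing` maps host names to addresses allocated earlier (or
    pre-populated by hand); those assignments are kept unchanged.
    This is a hypothetical helper, not part of Kayobe.
    """
    allocations = dict(existing or {})
    used = {ipaddress.ip_address(ip) for ip in allocations.values()}
    candidate = ipaddress.ip_address(pool_start)
    end = ipaddress.ip_address(pool_end)

    for host in hosts:
        if host in allocations:
            continue  # keep the address this host already has
        while candidate in used:
            candidate += 1
        if candidate > end:
            raise ValueError(f"allocation pool exhausted at host {host!r}")
        allocations[host] = str(candidate)
        used.add(candidate)
    return allocations


# Pool boundaries taken from the 'example' network definition above.
allocations = allocate_ips(
    ["controller1", "controller2", "controller3"],
    "10.0.0.3",
    "10.0.0.127",
    existing={"controller2": "10.0.0.3"},
)
for host, address in sorted(allocations.items()):
    print(host, address)
```

Because existing entries are never changed, re-running the allocation after adding a host leaves the addresses of the other hosts stable, which is the property that matters for a statically addressed control plane.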

There are also some per-host configuration items that allow us to define how hosts attach to networks. These would typically be added to a group or host variable file.

    # Definition of network interface for 'example' network.
    example_interface: breth1
    example_bridge_ports:
      - eth1

Kayobe defines classes of networks which can be mapped to the actual networks that have been configured. In our example, we may want to use the example network for both internal and external control plane communication. We would then typically define the following in networks.yml.

    # Map internal network communication to 'example' network.
    internal_net_name: example

    # Map external network communication to 'example' network.
    external_net_name: example

These network classes are used to determine to which networks each host should be attached, and how to configure Kolla-ansible. The default network list may be extended if necessary by setting controller_extra_network_interfaces in controllers.yml.
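As an illustration of the mapping from classes to networks, the following Python sketch resolves a list of network classes to the distinct networks a host would attach to. The helper `networks_for_host` and the list of class names passed to it are hypothetical; only the `<class>_net_name` variable convention comes from the configuration shown above.

```python
def networks_for_host(variables, network_classes):
    """Return the distinct networks a host attaches to, in first-seen order.

    Each class (e.g. 'internal') is resolved through its
    '<class>_net_name' variable; classes with no mapping are skipped.
    Hypothetical helper for illustration only.
    """
    names = []
    for network_class in network_classes:
        name = variables.get(f"{network_class}_net_name")
        if name and name not in names:
            names.append(name)
    return names


variables = {"internal_net_name": "example", "external_net_name": "example"}
print(networks_for_host(variables, ["internal", "external", "storage"]))
```

Both classes resolve to the same network here, so the host needs only one interface configuration, and the unmapped storage class is simply ignored.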

The final piece of this puzzle is a set of custom Jinja2 filters that allow us to query various attributes of these networks, using the name of the network.

    # Get the MTU for the 'example' network.
    example_mtu: "{{ 'example' | net_mtu }}"

We can also query attributes of other hosts.

    # Get the network interface for the 'example' network on host 'controller1'.
    example_interface: "{{ 'example' | net_interface('controller1') }}"

Finally, we can remove the explicit reference to our site-specific network name, example.

    # Get the CIDR for the internal network.
    internal_cidr: "{{ internal_net_name | net_cidr }}"
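The lookups above rely on the naming convention in which every attribute of a network is stored as `<name>_<attribute>`. The Python sketch below shows that idea in isolation. It is not Kayobe's filter code: the functions take plain dictionaries rather than an Ansible templating context, and their signatures are assumptions made for the example. In Ansible, functions like these are exposed to templates through a filter plugin, a `FilterModule` class whose `filters()` method returns a dict of names to callables.

```python
def net_attr(variables, name, attr):
    """Look up '<name>_<attr>', following the network variable prefix convention."""
    return variables.get(f"{name}_{attr}")


def net_mtu(variables, name):
    """Return the MTU of the named network."""
    return net_attr(variables, name, "mtu")


def net_cidr(variables, name):
    """Return the CIDR of the named network."""
    return net_attr(variables, name, "cidr")


def net_interface(hostvars, name, host):
    """Return the interface a given host uses to attach to the named network."""
    return net_attr(hostvars[host], name, "interface")


# Global variables, as they might appear in networks.yml.
variables = {
    "example_cidr": "10.0.0.0/24",
    "example_mtu": 1500,
    "internal_net_name": "example",
}
# Per-host variables, keyed by inventory host name.
hostvars = {"controller1": {"example_interface": "breth1"}}

print(net_mtu(variables, "example"))
print(net_cidr(variables, variables["internal_net_name"]))
print(net_interface(hostvars, "example", "controller1"))
```

The second call is the interesting one: the site-specific name never appears in the lookup, only the class variable that points at it.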

The decoupling of network definitions from network classes enables Kayobe to be very flexible in how it configures a cluster. In our experience this is an area in which TripleO is a little rigid.

Further information on configuration of networks can be found in the Kayobe documentation.

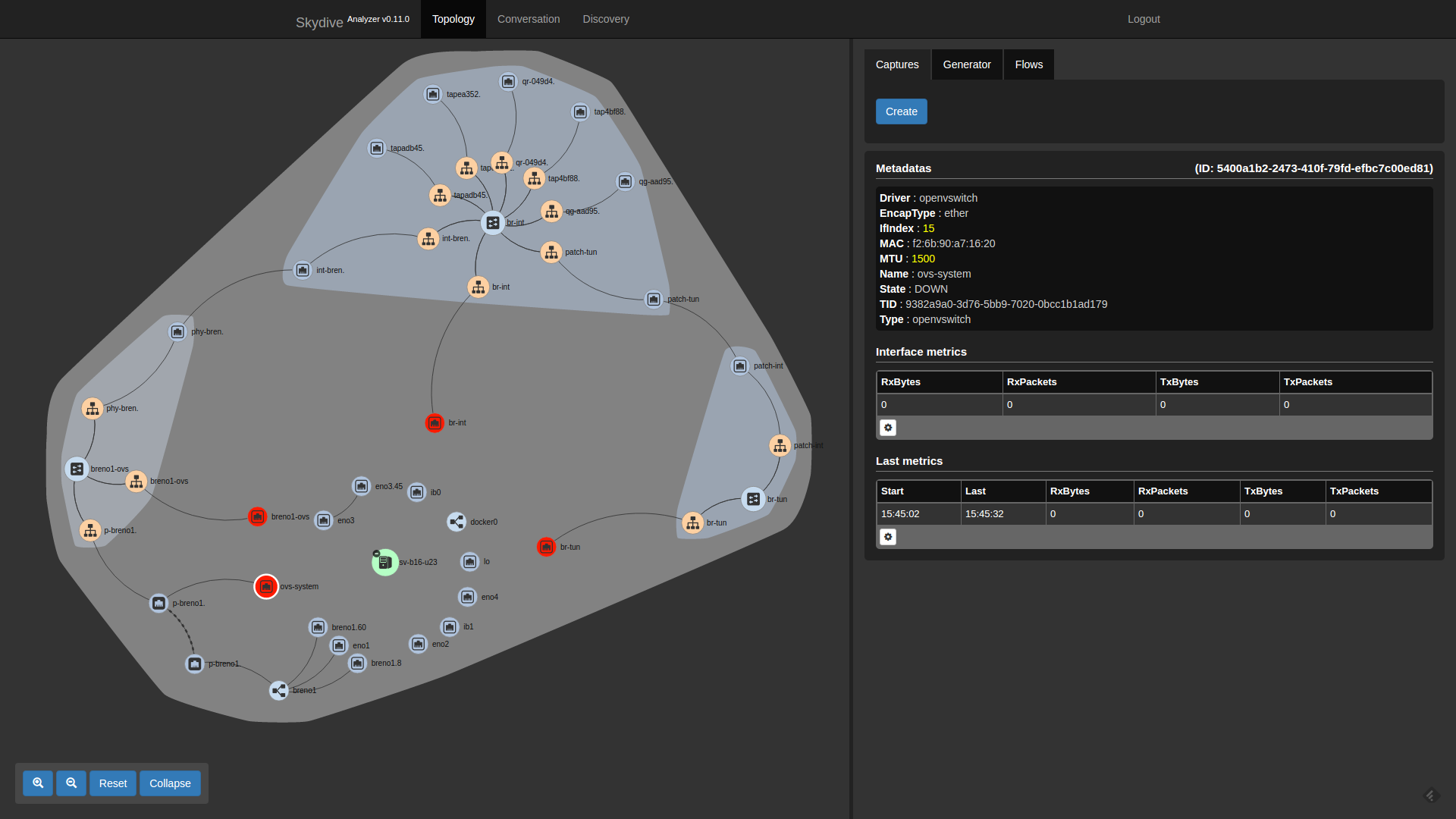

The Kayobe configuration for the Square Kilometre Array (SKA) Performance Prototype Platform (P3) system provides a good example of how this works in a real system. We used the Skydive project to visualise the network topology within one of the OpenStack controllers in the P3 system. In the Pike release, Kolla-ansible adds support for deploying Skydive on the control plane and virtualised compute hosts. We had to make a small change to Skydive to fix discovery of the relationship between VLAN interfaces and their parent link, and we'll contribute this upstream. Click the image link to see it in its full glory.

Physical networking

Hosts are of little use without properly configured network devices to connect them. Kayobe has the capability to manage the configuration of physical network switches using Ansible's network modules. Currently Dell OS6 and Dell OS9 switches are supported, while Juniper switches will soon be added to the list.

Returning to our example of the SKA P3 system, we note that in HPC clusters it is common to need to manage multiple physical networks.

Each switch is configured as a host in the Ansible inventory, with host variables used to specify the switch's management IP address and admin user credentials.

    ansible_host: 10.0.0.200
    ansible_user: <admin username>
    ansible_ssh_password: ******

Kayobe doesn't currently provide a lot of abstraction around switch configuration - it is specified using three per-host variables.

    # Type of switch. One of 'dellos6', 'dellos9'.
    switch_type: dellos6

    # Global configuration. List of global configuration lines.
    switch_config:
      - "ip ssh server"
      - "hostname \"{{ inventory_hostname }}\""

    # Interface configuration. Dict mapping switch interface names to configuration
    # dicts. Each dict contains a 'description' item and a 'config' item which should
    # contain a list of per-interface configuration.
    switch_interface_config:
      Gi1/0/1:
        description: controller1
        config:
          - "switchport mode access"
          - "switchport access vlan {{ internal_net_name | net_vlan }}"
          - "lldp transmit-mgmt"
      Gi1/0/2:
        description: compute1
        config:
          - "switchport mode access"
          - "switchport access vlan {{ internal_net_name | net_vlan }}"
          - "lldp transmit-mgmt"

In this example we define the type of the switch as dellos6 to instruct Kayobe to use the dellos6 Ansible modules. Note the use of the custom filters seen earlier to limit the proliferation of the internal VLAN ID throughout the configuration. The Kayobe dell-switch role applies the configuration to the switches when the following command is run:

    kayobe physical network configure --group <group name>

The group defines the set of switches to be configured.
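To show how the three per-host variables relate to what ends up on the switch, here is a rough Python sketch that flattens them into an ordered list of CLI lines. The function `render_switch_config` is hypothetical, and the `interface ...` / `exit` framing is an assumption about a typical switch CLI; the dell-switch role and the underlying Ansible modules may structure this quite differently.

```python
def render_switch_config(global_config, interface_config):
    """Flatten global and per-interface settings into an ordered list of CLI lines.

    Hypothetical helper: the 'interface'/'exit' framing is an assumed
    CLI convention, not taken from Kayobe.
    """
    lines = list(global_config)
    for interface, settings in interface_config.items():
        lines.append(f"interface {interface}")
        lines.append(f"description {settings['description']}")
        lines.extend(settings.get("config", []))
        lines.append("exit")
    return lines


# Values as they would look after templating, assuming the internal VLAN is 42.
switch_config = ["ip ssh server", 'hostname "switch1"']
switch_interface_config = {
    "Gi1/0/1": {
        "description": "controller1",
        "config": ["switchport mode access", "switchport access vlan 42"],
    },
}

for line in render_switch_config(switch_config, switch_interface_config):
    print(line)
```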

Once more, the P3 system's Kayobe configuration provides some good examples. In particular, check out the configuration for one of the management switches, and the associated group variables.

Next Time

In the next article in this series we'll look at how StackHPC is working upstream on improvements to networking in the Ironic and Neutron Networking Generic Switch ML2 mechanism driver projects.