
How long does it take a team to bring up new hardware for private cloud? Despite long days at the data centre, why does it always seem to take longer than initial expectations? The commissioning of new hardware is tedious, with much unnecessary operator toil.

The scientific computing sector is already well served by tools for streamlining this process. The commercial product Bright Cluster Manager and open-source project xCAT (originally from IBM) are good examples. The OpenStack ecosystem can learn a lot from the approaches taken by these packages, and some of the gaps in what OpenStack Ironic can do have been painfully inconvenient when using projects such as TripleO and Bifrost at scale.

This post covers how this landscape is changing. Using new capabilities in OpenStack's Ironic Inspector, and new support for manipulating network switches using Ansible, we can combine Ironic and Ansible to bring zero-touch provisioning to OpenStack private clouds.

Provision This...

Recently we have been working on a performance prototyping platform for the SKA telescope. In a nutshell, this project aims to identify promising technologies to pick up and run with as the SKA development ramps up over the next few years. The system features a number of compute nodes with more exotic hardware configurations for the SKA scientists to explore.

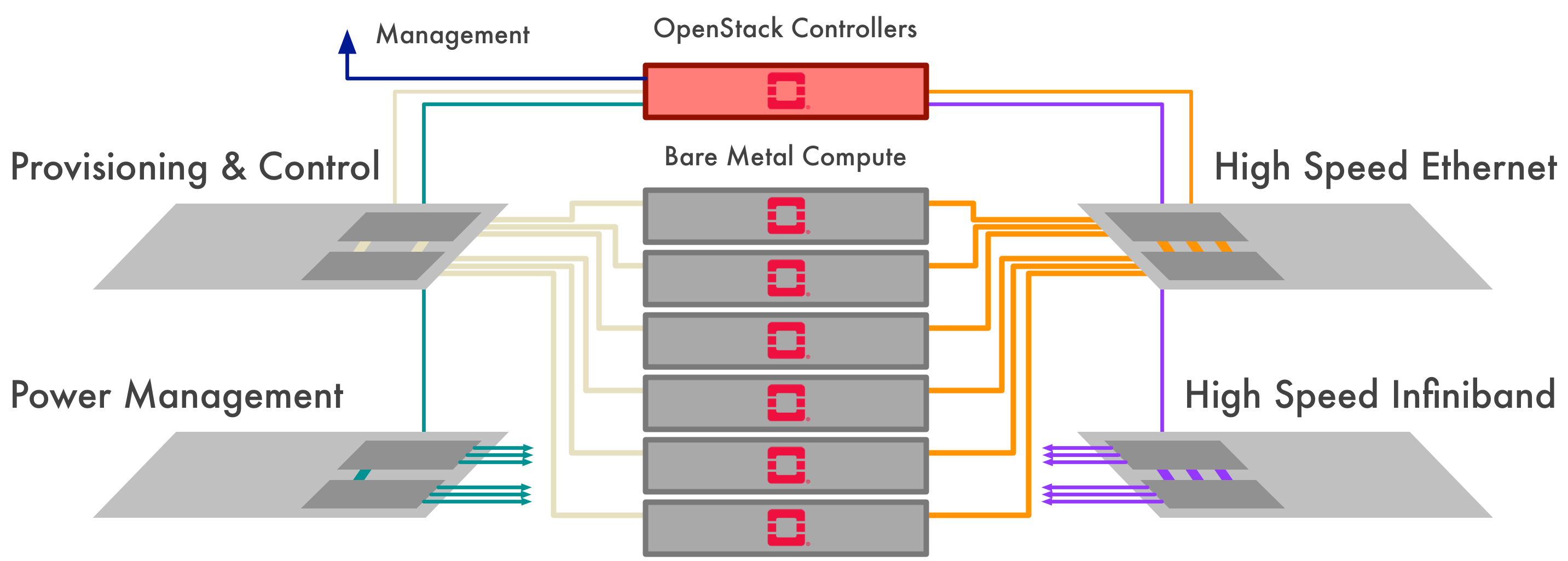

This system uses Dell R630 compute nodes, running as bare metal, managed using OpenStack Ironic, with an OpenStack control plane deployed using Kolla.

The system has a number of networks that must be managed effectively by OpenStack, without incurring any performance overhead. All nodes have rich network connectivity, something which has been a problem for Ironic, and which we are also working on. Each node is attached to the following networks:

- Power Management. Ironic requires access to the compute server baseboard management controllers (BMCs). This enables Ironic to power nodes on and off, access serial consoles and reconfigure BIOS and RAID settings.

- Provisioning and Control. When a bare metal compute node is being provisioned, Ironic uses this network interface to network-boot the compute node, and transfer the instance software image to the compute node's disk. When a compute node has been deployed and is active, this network is configured as the primary network for external access to the instance.

- High Speed Ethernet. This network will be used for modelling the high-bandwidth data feeds being delivered from the telescope's Central Signal Processor (CSP). Some Ethernet-centric storage technologies will also use this network.

- High Speed Infiniband. This network will be reserved for low-latency, high-bandwidth messaging, either between tightly-coupled compute nodes or between compute nodes and tightly-coupled storage.

Automagic Provisioning Using xCAT

Before we dive into the OpenStack details, let's make a quick detour with an overview of how xCAT performs what it calls "Automagic provisioning".

- This technique only works if your hardware attempts a PXE boot in its factory default configuration. If it doesn't, well, that's unfortunate!

- We start with the server hardware racked and cabled up to the provisioning network switches. The servers don't need configuring - that is automatically done later.

- The provisioning network switches must be configured with management access and SNMP read access enabled. The required VLAN membership must be configured on the server access ports, and that VLAN must be isolated for the exclusive use of xCAT provisioning.

- xCAT is configured with addresses and credentials for SNMP access to the provisioning network switches. A physical network topology must also be defined in xCAT, which associates switches and ports with connected servers. At this point, this is all xCAT knows about a server: that it is an object attached to a given network port.

- The server is powered on (OK, this is manual; perhaps "zero-touch" is an exaggeration...), and performs a PXE boot. For a DHCP request from an unidentified MAC, xCAT will provide a generic introspection image for PXE-boot.

- xCAT uses SNMP to trace the request to a switch and network port. If this network port is in xCAT's database, the server object associated with the port is populated with introspection details (such as the MAC address).

- At this stage, the server awaits further instructions. Commissioning new hardware may involve firmware upgrades, in-band BIOS configuration, BMC credentials, etc. These are performed using site-specific operations at this point.
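The core of the xCAT flow is a lookup: trace an unknown MAC address to a switch port, then attach the introspection results to whichever server object the topology places on that port. The following is a minimal Python sketch of that idea, not xCAT's code. It assumes the SNMP forwarding-table query can be modelled as a plain dictionary from MAC address to (switch, port), and the names ServerObject and claim_discovered_node are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

# (switch, port) pair, as recorded in the physical network topology.
SwitchPort = Tuple[str, str]


@dataclass
class ServerObject:
    """A server known only by the switch port it is cabled to."""
    name: str
    mac: Optional[str] = None
    details: Dict[str, str] = field(default_factory=dict)


def claim_discovered_node(
    mac: str,
    mac_table: Dict[str, SwitchPort],
    topology: Dict[SwitchPort, ServerObject],
    introspection: Dict[str, str],
) -> Optional[ServerObject]:
    """Attach introspection results to the server object behind a switch port.

    mac_table stands in for the SNMP lookup that traces a DHCP request
    back to a switch and port (a hypothetical simplification).
    """
    location = mac_table.get(mac)
    if location is None:
        return None  # MAC not seen on any managed switch port
    server = topology.get(location)
    if server is None:
        return None  # port not in the topology: leave the node alone
    server.mac = mac
    server.details.update(introspection)
    return server


if __name__ == "__main__":
    topology = {("switch-1", "Te1/1/1"): ServerObject("compute-1")}
    mac_table = {"aa:bb:cc:dd:ee:01": ("switch-1", "Te1/1/1")}
    node = claim_discovered_node("aa:bb:cc:dd:ee:01", mac_table, topology, {"cpus": "32"})
    print(node)
```

The identity of the server comes entirely from where it is cabled, which is why an accurate topology table is the one piece of data that has to be maintained by hand.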

We have experience of several different approaches: Bright Cluster Manager, xCAT, TripleO and OpenStack Ironic. This gives us a pretty good idea of what is possible, and the benefits and weaknesses of each. For example, once it is set up, the xCAT flow offers many advantages over a manual approach to hardware commissioning. Some cloud-centric infrastructure management techniques can be applied to simplify the process further.

An Ironic Inspector Calls

Here's how we put together a system, built around Ironic, for streamlined infrastructure commissioning using OpenStack tools. We've collected together our Ansible playbooks and supporting scripts as part of our new Kolla-based OpenStack deployment project, Kayobe.

One principal difference from the xCAT workflow is that Ironic Inspector does not make modifications to server state, and by default does not keep the introspection ramdisk active after introspection or enable an SSH login environment. This prevents us from using xCAT's technique of invoking custom commands to perform site-specific commissioning actions. We'll cover how those actions are achieved below.

- We use Ansible network modules to configure the management switches for the Provisioning and Control network. In this case, they are Dell S6010-ON network-booting switches, and we have configured Ironic Inspector's dnsmasq server to boot them with Dell/Force10 OS9. We use the dellos9 Ansible network modules for this.

- Using tabulated YAML data mapping switch ports to compute hosts, Ansible configures the switches with port descriptions and membership of the provisioning VLAN for all the compute node access ports. Some other basic configuration is applied to set the switches up for operation, such as enabling LLDP and configuring trunk links.

- Ironic Inspector's dnsmasq service is configured to boot unrecognised MAC addresses (for servers not in the Ironic inventory) to perform introspection on those servers.

- The LLDP datagrams from the switch are received during introspection, including the switch port description label we assigned using Ansible.

- Using Inspector's discovery capabilities, the introspection data gathered from those nodes is used to register each new node in the Ironic inventory.

- With Ironic Inspector's rule-based transformations, we can populate the server's state in Ironic with BMC credentials, deployment image IDs and other site-specific information. We name the nodes using a rule that extracts the switch port description received via LLDP.
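The naming step depends on pulling the switch port description out of the LLDP data seen during introspection. The Python sketch below shows the decoding involved. It assumes, as a simplification, that each interface in the introspection data carries an "lldp" list of [tlv_type, hex_value] pairs, and that the port description was set to the desired host name; port_description and node_name are hypothetical helpers. Inspector also ships LLDP processing hooks that do similar decoding server-side.

```python
from typing import Dict, List, Optional, Sequence

# IEEE 802.1AB TLV type number for Port Description.
PORT_DESCRIPTION_TLV = 4


def port_description(lldp: List[Sequence]) -> Optional[str]:
    """Return the decoded Port Description TLV, if present."""
    for tlv_type, hex_value in lldp:
        if tlv_type == PORT_DESCRIPTION_TLV:
            # The TLV value is a plain string, reported here as hex.
            return bytes.fromhex(hex_value).decode("utf-8", errors="replace").strip()
    return None


def node_name(interfaces: List[Dict]) -> Optional[str]:
    """Use the first non-empty switch port description as the node name."""
    for iface in interfaces:
        name = port_description(iface.get("lldp") or [])
        if name:
            return name
    return None
```

Returning None when no description is found lets the caller fall back to another naming scheme, such as the switch address and port ID matching described in the next section.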

So far, so good, but there's a catch...

Dell OS9 and LLDP

Early development was done on other switches, including simpler beasts running Dell Network OS6. It appears that Dell Network OS9 does not yet support some simple-but-critical features we had taken for granted for the cross-referencing of switch ports with servers. Specifically, Dell Network OS9 does not support transmitting LLDP's port description TLV that we were assigning using Ansible network modules.

To work around this we decided to fall back to the same method used in xCAT: we match using switch address and port ID. To do this, we create Inspector rules for matching each port and performing the appropriate assignment. And with that, the show rolls on.
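Because one rule is needed per port, the rules are best generated from the same port-mapping data used to configure the switches. The Python sketch below builds rule definitions in the shape used by the introspection rules API (description, conditions with field/op/value, actions with action/path/value). The data:// field paths are hypothetical placeholders: the real paths depend on which Inspector processing hooks are enabled. Note also that the port ID string a switch advertises may not match the interface name used in the Ansible inventory, so a translation step may be needed.

```python
from typing import Dict, List

# Hypothetical field paths; adjust to your Inspector data layout.
SWITCH_FIELD = "data://hypothetical.switch_address"
PORT_FIELD = "data://hypothetical.switch_port_id"


def port_rules(switch_address: str, ports: Dict[str, Dict]) -> List[Dict]:
    """Build one naming rule per switch port.

    ports maps a port ID to a dict with a "description" key holding the
    host name, mirroring the switch_interface_config host variable.
    """
    rules = []
    for port_id, port in ports.items():
        rules.append({
            "description": f"Name node on {switch_address} {port_id}",
            # All conditions must match for the actions to run.
            "conditions": [
                {"field": SWITCH_FIELD, "op": "eq", "value": switch_address},
                {"field": PORT_FIELD, "op": "eq", "value": port_id},
            ],
            "actions": [
                {"action": "set-attribute", "path": "name",
                 "value": port["description"]},
            ],
        })
    return rules
```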

Update: Since the publication of this article, newer versions of Dell Networking OS9 have gained the capability of advertising port descriptions, making this workaround unnecessary. For S6010-ON switches, this is available since version 9.11(2.0P1) using the advertise interface-port-desc description LLDP configuration.

Ironic's Catch-22

Dell's defaults for the iDRAC BMC assign a default IP address and credentials, so every BMC on the Power Management network starts out with the same IP address. IPMI is initially disabled, allowing access only through the WSMAN protocol used by the idracadm client. In order for Ironic to manage these nodes, their BMCs each need a unique IP address on the Power Management subnet.

Ironic Inspector's introspection is designed to be a read-only process. While the Ironic Python Agent (IPA) ramdisk can discover the IP address of a server's BMC, there is currently no mechanism for setting the IP address of a newly discovered node's BMC.

Our solution involves more use of the Ansible network modules.

- Before inspection takes place we traverse our port-mapping YAML tables, putting the network port of each new server's BMC in turn into a dedicated commissioning VLAN.

- Within the commissioning VLAN, the BMC can be reached at its default IP address in isolation. We connect to the BMC via idracadm, assign it the required IP address, and enable IPMI.

- The network port for this BMC is reverted to the Power Management VLAN.
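Since every BMC starts on the same default address, only one can be reachable at a time, which forces the steps above to run strictly one BMC after another. The Python sketch below only plans that sequence and allocates a unique address per node; it does not touch any switch or BMC. The VLAN IDs, the sequential allocation scheme and the function name plan_bmc_commissioning are hypothetical.

```python
import ipaddress
from typing import Dict, List, Tuple

COMMISSIONING_VLAN = 999  # hypothetical dedicated commissioning VLAN
POWER_MGMT_VLAN = 100     # hypothetical Power Management VLAN


def plan_bmc_commissioning(
    bmc_ports: List[Tuple[str, str]], subnet: str, first_address: str
) -> List[Dict]:
    """Plan one isolate/configure/revert cycle per (switch port, node) pair.

    Addresses are allocated sequentially from first_address and must stay
    inside the Power Management subnet.
    """
    network = ipaddress.ip_network(subnet)
    start = ipaddress.ip_address(first_address)
    steps = []
    for offset, (port, node) in enumerate(bmc_ports):
        address = start + offset
        if (address not in network
                or address in (network.network_address, network.broadcast_address)):
            raise ValueError(f"no usable address left in {subnet} for {node}")
        steps.append({
            "node": node,
            "address": str(address),
            "actions": [
                f"move {port} to VLAN {COMMISSIONING_VLAN}",
                f"set BMC IP of {node} to {address} and enable IPMI",
                f"move {port} back to VLAN {POWER_MGMT_VLAN}",
            ],
        })
    return steps
```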

At this point the nodes are ready to be inspected. The BMCs' new IP addresses will be discovered by Inspector and used to populate the nodes' driver info fields in Ironic.

Automate all the Switches

The configuration of a network, when applied manually, can quickly become complex and poorly understood. Even simple CLI-based automation like that used by the dellos Ansible network modules can help to grow confidence in making changes to a system, without the complexity of an SDN controller.

Some modern switches such as the Dell S6010-ON support network booting an operating system image. Kayobe's dell-switch-bmp role configures a network boot environment for these switches in a Bifrost container managed by Kolla-Ansible.

Once booted, these switches need to be configured. We developed the simple dell-switch role to configure the required global and per-interface options.

Switch configuration is codified as Ansible host variables (host_vars) for each switch. The following is an excerpt from one of our switches' host variables files:

```yaml
# Host/IP on which to access the switch via SSH.
ansible_host: <switch IP>

# Interface configuration.
switch_interface_config:
  Te1/1/1:
    description: compute-1
    config: "{{ switch_interface_config_all }}"
  Te1/1/2:
    description: compute-2
    config: "{{ switch_interface_config_all }}"
```

As described previously, the interface description provides the necessary mapping from interface name to compute host. We reference the switch_interface_config_all variable which is kept in an Ansible group variables (group_vars) file to keep things DRY. The following snippet is taken from such a file:

```yaml
# User to access the switch via SSH.
ansible_user: <username>

# Password to access the switch via SSH.
ansible_ssh_pass: <password>

# Interface configuration for interfaces with controllers or compute
# nodes attached.
switch_interface_config_all:
  - "no shutdown"
  - "switchport"
  - "protocol lldp"
  - " advertise dot3-tlv max-frame-size"
  - " advertise management-tlv management-address system-description system-name"
  - " advertise interface-port-desc"
  - " no disable"
  - " exit"
```

Interfaces attached to compute hosts are enabled as switchports and have several LLDP TLVs enabled to support inspection.
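To make the DRY layering concrete, the Python sketch below resolves the host_vars and group_vars shown above into per-interface configuration lines, with the description first, and inverts the table into a host-to-port map. It is a hypothetical illustration, not the dell-switch role itself; a role could pass similar data to a module such as dellos9_config as its parents and lines arguments.

```python
from typing import Dict, List

# group_vars: shared configuration for compute-facing interfaces.
switch_interface_config_all = [
    "no shutdown",
    "switchport",
    "protocol lldp",
    " advertise dot3-tlv max-frame-size",
    " advertise management-tlv management-address system-description system-name",
    " advertise interface-port-desc",
    " no disable",
    " exit",
]

# host_vars: per-switch interface table referencing the shared list.
switch_interface_config = {
    "Te1/1/1": {"description": "compute-1", "config": switch_interface_config_all},
    "Te1/1/2": {"description": "compute-2", "config": switch_interface_config_all},
}


def render(interfaces: Dict[str, Dict]) -> Dict[str, List[str]]:
    """Return {interface: config lines}, with the description line first."""
    return {
        name: [f"description {iface['description']}"] + list(iface["config"])
        for name, iface in interfaces.items()
    }


def host_to_port(interfaces: Dict[str, Dict]) -> Dict[str, str]:
    """Invert the table: compute host name -> switch interface."""
    return {iface["description"]: name for name, iface in interfaces.items()}
```

Changing an LLDP option then means editing one list in group_vars rather than every interface entry on every switch.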

We wrap this up in a playbook and make it user-friendly by exposing it through our CLI as the command kayobe physical network configure.

iDRAC Commissioning

The idrac-bootstrap.yml playbook used to commission the box-fresh iDRACs required some relatively complex task sequencing across multiple hosts using multiple plays and roles.

A key piece of the puzzle involves the use of an Ansible task file included multiple times using a with_dict loop, in a play targeted at the switches using serial: 1. This allows us to execute a set of tasks for each BMC in turn. A simplified example of this is shown in the playbook below:

```yaml
- name: Execute multiple tasks for each interface on each switch serially
  hosts: switches
  serial: 1
  tasks:
    - name: Execute multiple tasks for an interface
      # Later Ansible releases replace the bare 'include' with 'include_tasks'.
      include: task-file.yml
      with_dict: "{{ switch_interface_config }}"
```

Here we reference the switch_interface_config variable seen previously. task-file.yml might look something like this:

```yaml
- name: Display the name of the interface
  debug:
    var: item.key

- name: Display the description of the interface
  debug:
    var: item.value.description
```
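The nesting of serial: 1 and the looped include is what guarantees that all of one BMC's tasks finish before the next begins. The Python sketch below models only that ordering; it does not run Ansible. It assumes interfaces are visited in the order of the switch_interface_config dictionary, and execution_order is a hypothetical name.

```python
from typing import Dict, List, Tuple


def execution_order(
    switches: Dict[str, Dict[str, Dict]], task_names: List[str]
) -> List[Tuple[str, str, str]]:
    """List (switch, interface, task) in the order the play would run them."""
    order = []
    for switch, interfaces in switches.items():  # serial: 1, one switch per batch
        for iface in interfaces:                 # with_dict over the interfaces
            for task in task_names:              # every task in task-file.yml
                order.append((switch, iface, task))
    return order
```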

This commissioning technique is clearly not perfect: its execution time scales linearly with the number of servers being commissioned. That said, it automated a labour-intensive manual task on the critical path of our deployment, and takes only about 20 seconds per node.
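The linear cost is easy to estimate. A back-of-the-envelope sketch, assuming the figure of roughly 20 seconds per node holds constant (in practice it will vary with BMC and switch response times):

```python
def commissioning_minutes(nodes: int, seconds_per_node: float = 20.0) -> float:
    """Serial commissioning time, in minutes, for a given number of nodes."""
    return nodes * seconds_per_node / 60
```

At this rate every three nodes cost about a minute, so a rack-scale batch of 300 nodes would take around 100 minutes.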

We think there is room for a solution that is more integrated with Ironic Inspector and would like to return to the problem before our next deployment.

Introspection Rules

Ironic Inspector's introspection rules API provides a flexible mechanism for processing the data returned from the introspection ramdisk that does not require any server-side code changes.

There is currently no upstream Ansible module for automating the creation of these rules. We developed the ironic-inspector-rules role to fill the gap and continue boldly into the land of Infrastructure-as-code. At the core of this role is the os_ironic_inspector_rule module, which follows the patterns of the upstream os_* modules and provides us with an Ansible-compatible interface to the introspection rules API. The role ensures the required Python dependencies are installed and allows configuration of multiple rules.

With this role in place, we can define our required introspection rules as Ansible variables. For example, here is a rule definition used to update the BMC credentials of a newly discovered node:

```yaml
# Ironic inspector rule to set IPMI credentials.
inspector_rule_ipmi_credentials:
  description: "Set IPMI driver_info if no credentials"
  conditions:
    - field: "node://driver_info.ipmi_username"
      op: "is-empty"
    - field: "node://driver_info.ipmi_password"
      op: "is-empty"
  actions:
    - action: "set-attribute"
      path: "driver_info/ipmi_username"
      value: "{{ inspector_rule_var_ipmi_username }}"
    - action: "set-attribute"
      path: "driver_info/ipmi_password"
      value: "{{ inspector_rule_var_ipmi_password }}"
```

By adding a layer of indirection to the credentials, we can provide a rule template that is reusable between different systems. The username and password are then configured separately:

```yaml
# IPMI username referenced by inspector rule.
inspector_rule_var_ipmi_username:

# IPMI password referenced by inspector rule.
inspector_rule_var_ipmi_password:
```

This pattern is commonly used in Ansible as it allows granular customisation without the need to redefine the entirety of a complex variable.
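To show what the IPMI credentials rule does to a node, the Python sketch below evaluates it locally. This is a simplified model, not Ironic Inspector's implementation: it supports only the "is-empty" condition and the "set-attribute" action used in the rule, and treats node:// fields and action paths as nested dictionary lookups. The make_rule parameters stand in for Ansible's templating of inspector_rule_var_ipmi_username and inspector_rule_var_ipmi_password; all function names are hypothetical.

```python
import copy
from typing import Any, Dict


def make_rule(username: str, password: str) -> Dict[str, Any]:
    """The rule template, with credentials supplied separately."""
    return {
        "description": "Set IPMI driver_info if no credentials",
        "conditions": [
            {"field": "node://driver_info.ipmi_username", "op": "is-empty"},
            {"field": "node://driver_info.ipmi_password", "op": "is-empty"},
        ],
        "actions": [
            {"action": "set-attribute", "path": "driver_info/ipmi_username", "value": username},
            {"action": "set-attribute", "path": "driver_info/ipmi_password", "value": password},
        ],
    }


def _lookup(node: Dict[str, Any], dotted: str) -> Any:
    """Follow a dotted path such as driver_info.ipmi_username."""
    value: Any = node
    for key in dotted.split("."):
        if not isinstance(value, dict):
            return None
        value = value.get(key)
    return value


def apply_rule(rule: Dict[str, Any], node: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of node with the rule applied if every condition holds."""
    result = copy.deepcopy(node)
    for cond in rule["conditions"]:
        if cond["op"] != "is-empty" or not cond["field"].startswith("node://"):
            raise ValueError(f"unsupported condition in this sketch: {cond}")
        if _lookup(result, cond["field"][len("node://"):]):
            return result  # a condition failed, so no actions run
    for action in rule["actions"]:
        if action["action"] != "set-attribute":
            raise ValueError(f"unsupported action in this sketch: {action}")
        *parents, leaf = action["path"].strip("/").split("/")
        target = result
        for key in parents:
            target = target.setdefault(key, {})
        target[leaf] = action["value"]
    return result
```

Because the conditions check for empty credentials, the rule leaves already-configured nodes untouched, so it is safe to re-run introspection on them.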

Acknowledgements

- With thanks to Matt Raso-Barnett from Cambridge University for a detailed overview of how xCAT zero-touch provisioning was used for deploying the compute resource for the Gaia project.